Yesterday, I was at Google Search Central Live in Zurich. A lot was said, so here is a recap!

PS: at the end, I’ll share the SEO / GEO checklist as presented by Google.


A Google core update expected before the end of 2025

Before getting into the details of his keynote:

John hinted that a new core update will be rolled out before the end of the year. To end the year on a high note… or so I hope.

He also pointed out that Google regularly rolls out small core updates that they don’t always talk about. Interesting…

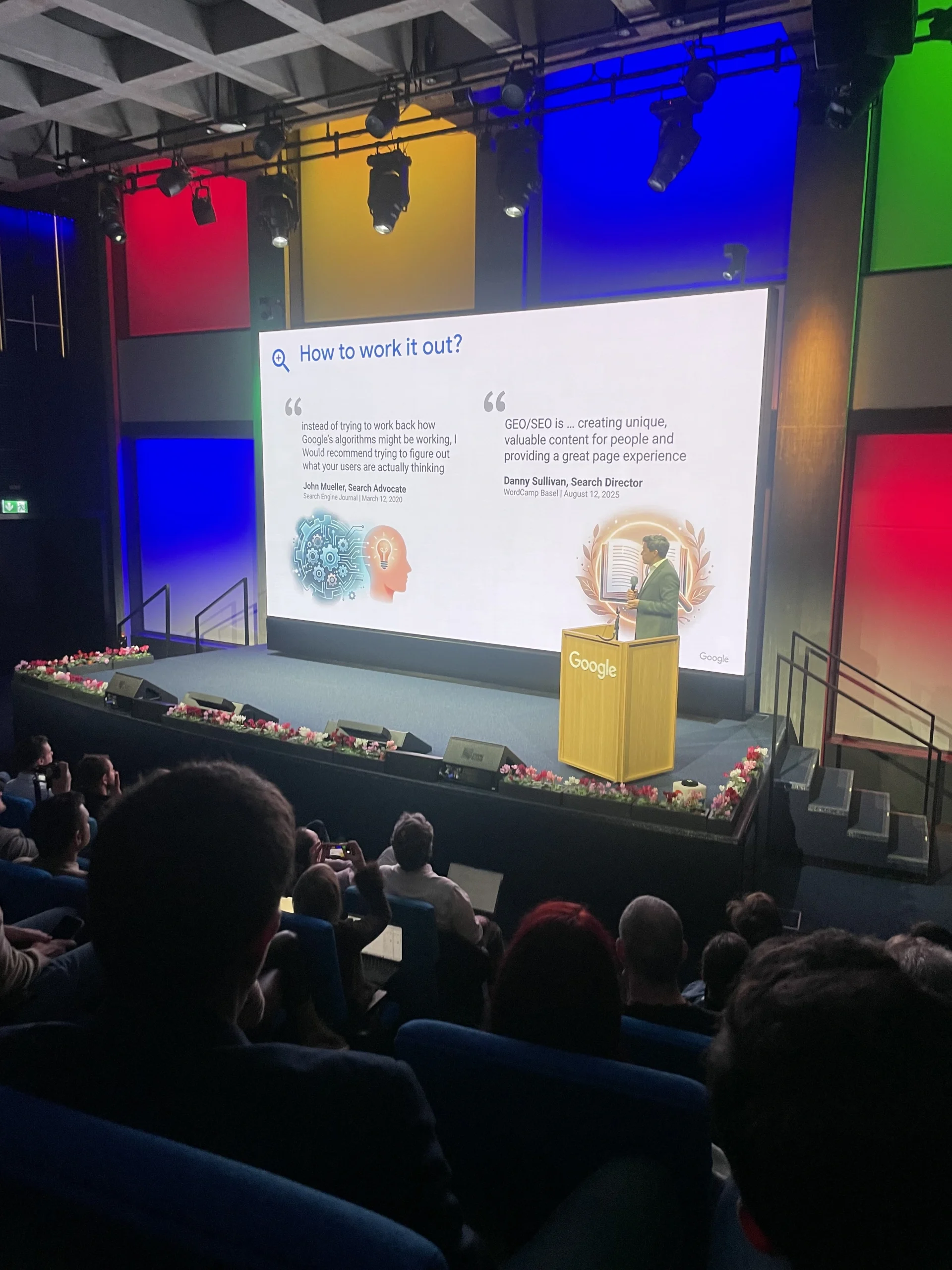

John Mueller: Good GEO is GOOD for SEO

John Mueller is aligned with Danny Sullivan: “Good GEO is Good SEO”.

In theory, this is true. In the field, it is more complicated.

With all the excitement around GEO, try telling a client that GEO is really just a form of SEO…

It can cost you a contract. Paradoxical, but real.

That said, I understand why he said it. From a technical (and simplistic) point of view, he’s right: AI Overviews and AI Mode rely directly on the Google index.

So yes, SEO is necessary… but clearly not enough.

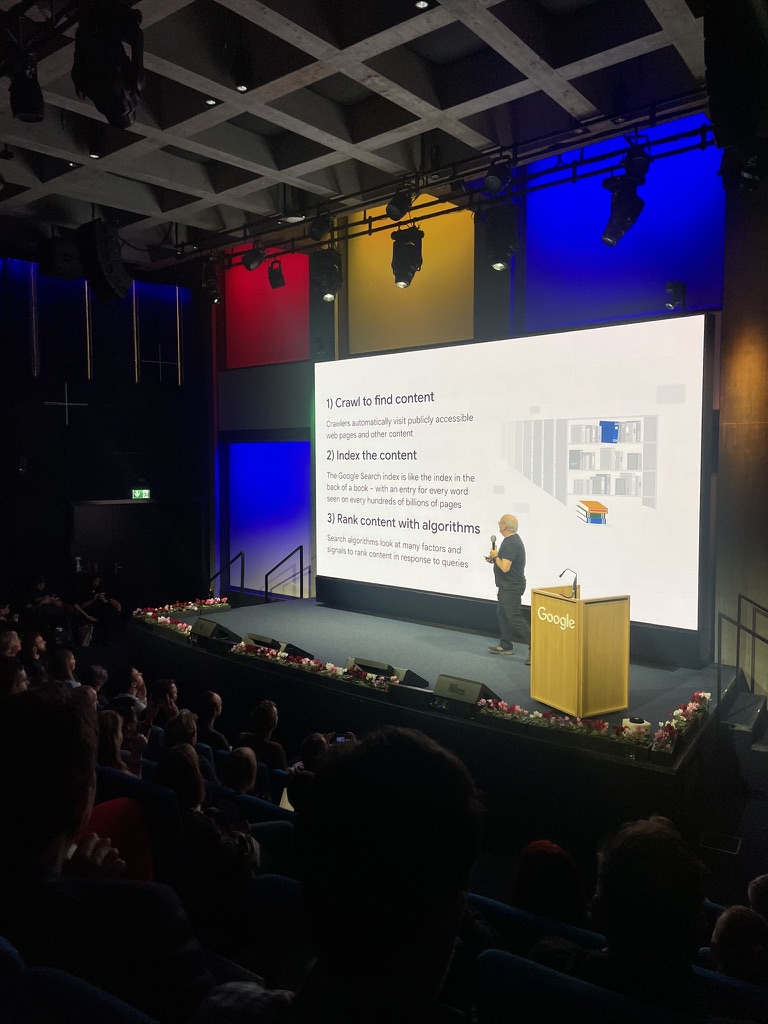

Crawl, Crawl, Crawl

The image doesn’t really reflect what John was saying.

He insisted on crawling. He really stressed it.

In his view, Google crawls a lot. Too much, even.

And next year’s winners will be those who:

- understand crawling,

- optimize for crawling,

- keep re-optimizing for crawling,

- optimize their crawl budget to avoid excessive crawling.

Google wants you to make its job easier.
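John didn’t show any code, of course. But a practical first step on the crawl-budget point is to check where Googlebot actually spends its requests, using your server logs. Here is a minimal Python sketch, assuming access logs in the Combined Log Format; the sample lines are made up, and matching the user-agent string is only a rough filter (genuine Googlebot traffic should be confirmed with a reverse DNS lookup, which this sketch skips).

```python
import re
from collections import Counter

# Combined Log Format: host ident user [date] "METHOD path PROTO" status size "referer" "user-agent"
LOG_RE = re.compile(
    r'^\S+ \S+ \S+ \[[^\]]+\] "(?:GET|HEAD|POST) (?P<path>\S+) [^"]*" '
    r'(?P<status>\d{3}) \S+ "[^"]*" "(?P<ua>[^"]*)"'
)

def googlebot_hits_by_section(lines):
    """Count requests whose user agent claims to be Googlebot, per first path segment."""
    counts = Counter()
    for line in lines:
        m = LOG_RE.match(line)
        if not m or "Googlebot" not in m.group("ua"):
            continue  # unparsable line or another client
        path = m.group("path").split("?", 1)[0]  # ignore query strings
        section = "/" + path.lstrip("/").split("/", 1)[0]
        counts[section] += 1
    return counts

# Made-up log lines (documentation IP ranges, example paths)
GOOGLEBOT_UA = "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"
sample = [
    f'66.249.66.1 - - [10/Oct/2025:13:55:36 +0000] "GET /products/shoe-1?color=red HTTP/1.1" 200 5120 "-" "{GOOGLEBOT_UA}"',
    f'66.249.66.1 - - [10/Oct/2025:13:55:40 +0000] "GET /products/shoe-2 HTTP/1.1" 200 4980 "-" "{GOOGLEBOT_UA}"',
    f'66.249.66.1 - - [10/Oct/2025:13:56:02 +0000] "GET /search?q=boots HTTP/1.1" 200 2048 "-" "{GOOGLEBOT_UA}"',
    '203.0.113.7 - - [10/Oct/2025:13:57:11 +0000] "GET /products/shoe-1 HTTP/1.1" 200 5120 "-" "Mozilla/5.0 (Windows NT 10.0)"',
]
hits = googlebot_hits_by_section(sample)
print(hits.most_common())
```

Sections that take a large share of Googlebot hits without bringing any traffic are the first ones worth looking at.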

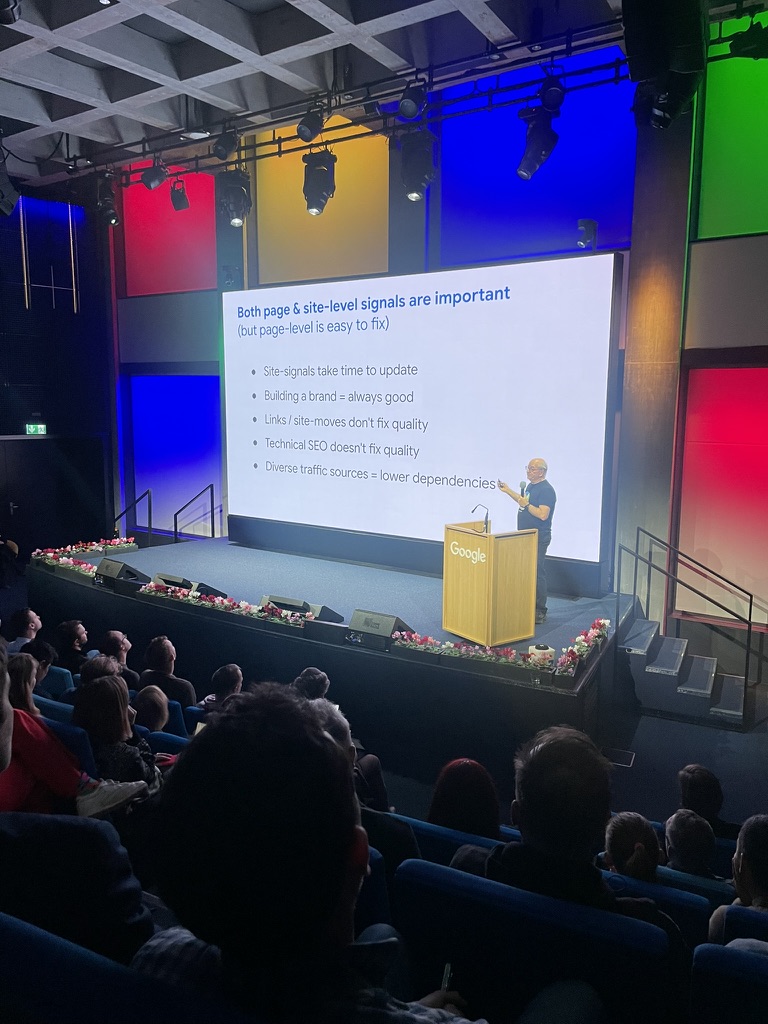

Authority site / authority pages

I admit that I was a little surprised.

John has spent years denying the concept of “website authority” or “site authority”…

And there, in the keynote, he talked about global authority, as if it were totally normal.

It feels like a bit of a glow-up in communication.

Probably the combined effect of the Google leaks and the DOJ antitrust trial.

Naturally, this requires realigning the messaging with the technical reality.

Otherwise, on the recommendations side, nothing unusual.

Except for one small detail that stings a little, especially for me as a technical SEO:

Google admits, between the lines, that technical optimizations only really make a difference on big sites.

Roughly:

On small sites, it is useful… but not a “game changer”.

On the behemoths, yes, every micro-optimization can move mountains.

Not the message we often hear, but at least it’s honest.

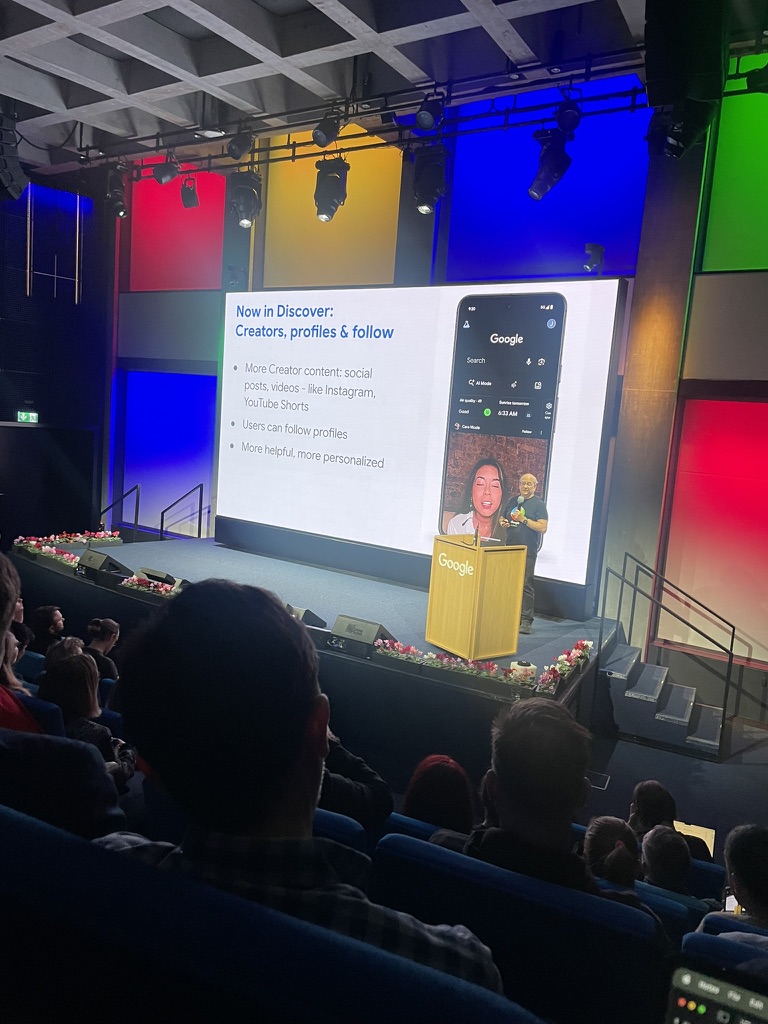

Diversification and multimodal search

John Mueller and Martin Splitt both insisted on one point: diversify.

When Google tells you not to put all your eggs in one basket… things are really heating up.

Of course, their official version was: “Invest in Discover, News, etc.”

But the real message was easy to read between the lines: we are entering a period where SEO alone is not enough.

You need to be everywhere. You need to go multimodal.

And it is fully consistent with the growing integration of social networks into Google’s vision: the web is no longer just blue links; it is social, video, news, Discover, multimedia, conversation.

Google is aware of this. We follow…

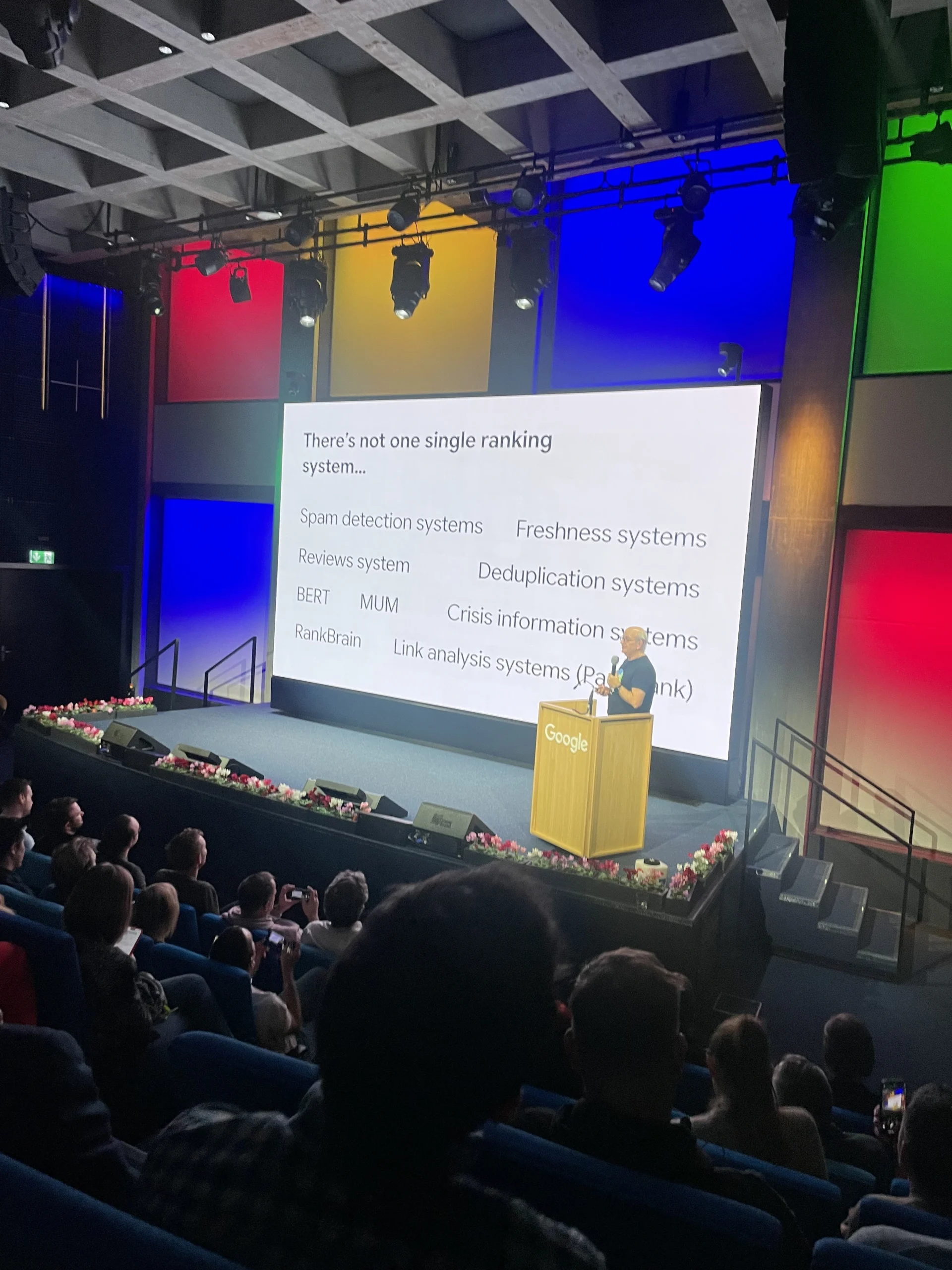

RankBrain, PageRank, spam policies, MUM

Always the paradox: John talked a lot about the past… to talk about the future.

He traced the line of the fundamentals: RankBrain, PageRank and spam detection, in that order.

Then he followed up with BERT, MUM and the freshness systems.

The rest was displayed on the slide… but he didn’t say a word about it.

The message was pretty clear: you need to know and master the basics before moving on to other things!

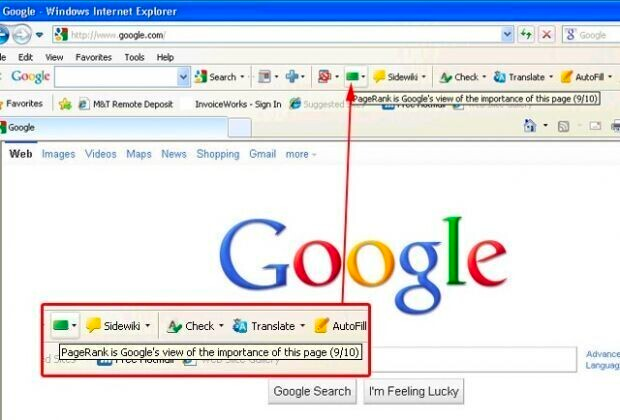

What was interesting was the question he asked right after:

“Have you ever seen PageRank with your own eyes?”

A little nod to the days when Google displayed PageRank right under the search bar.

Yes, Google used to be more transparent. Times have changed.

Could a feature like that come back?

In Search Console, maybe?

They announced that a lot of new features were coming… so who knows.

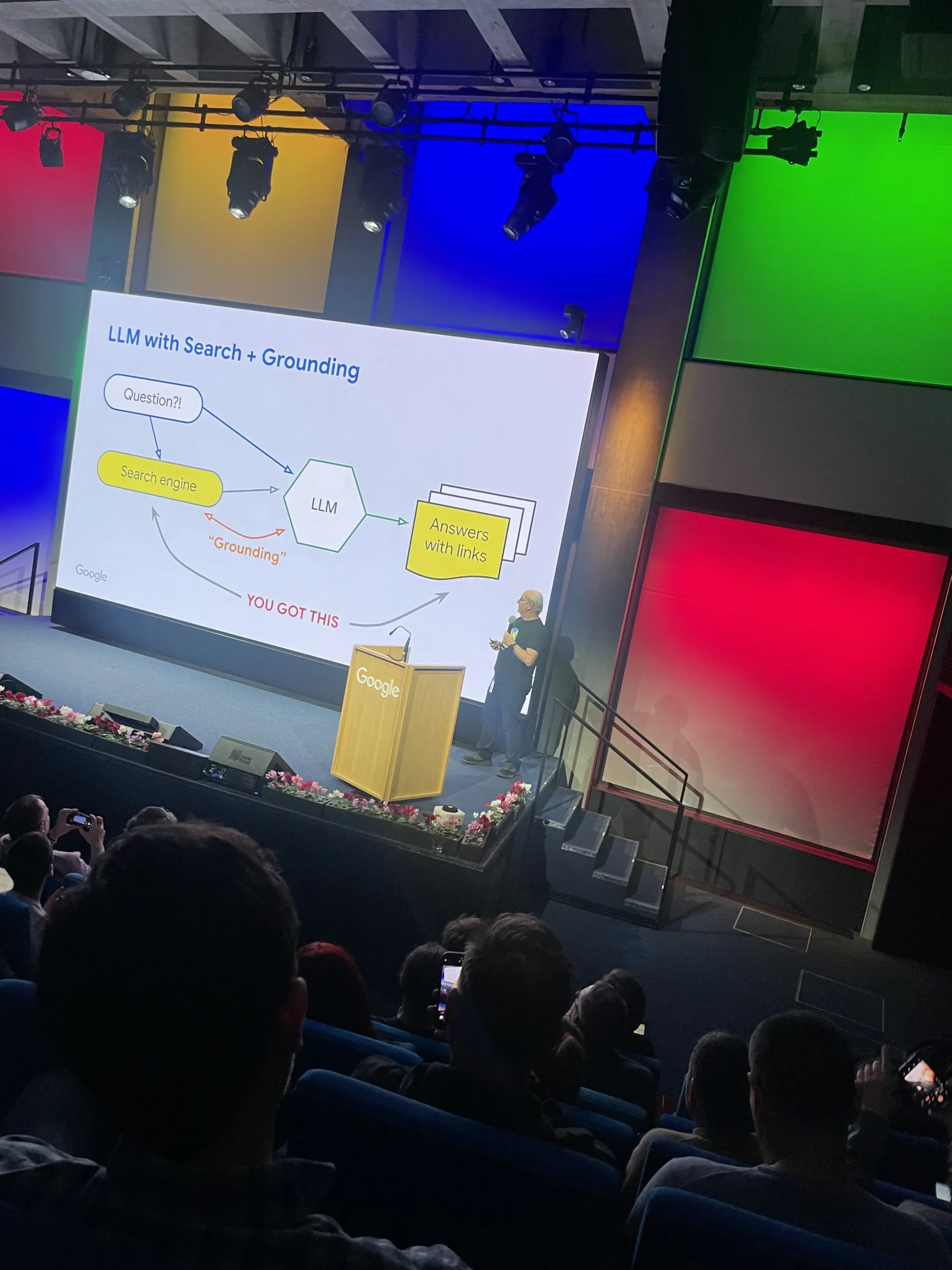

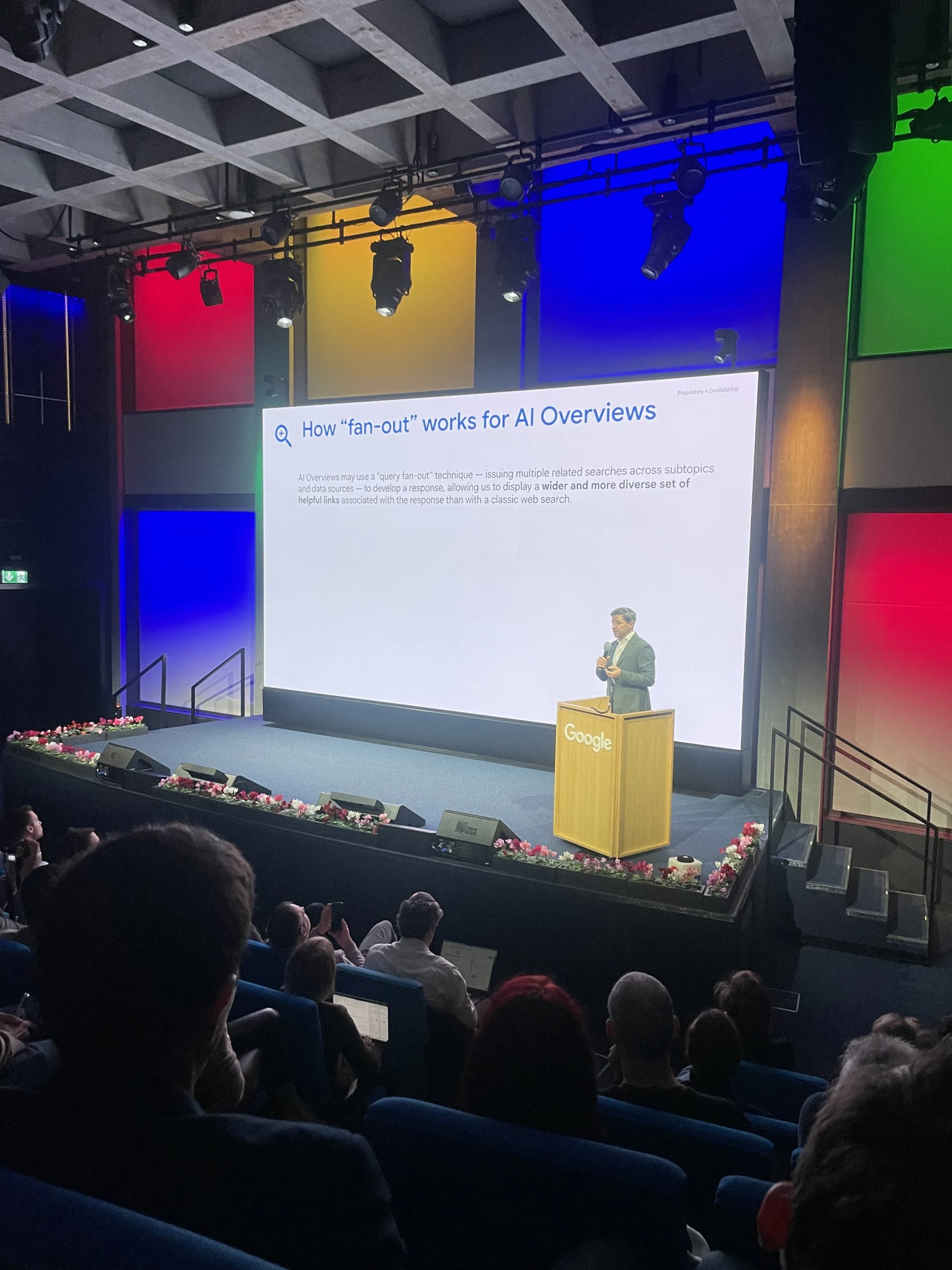

Query Fan Out: AI Overviews

We knew that Gemini, AI Overviews and AI Mode use query fan-out, but it is always reassuring to get confirmation from Google. It came from Nikola Todorovic, Director, Google Search Zurich.

Nicolas addressed the subject (briefly), explaining the method and its implications.
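Google didn’t detail the implementation, so the following is only a toy illustration of the fan-out idea, not how Gemini or AI Mode actually work: one query is expanded into related sub-queries, each sub-query is run against an index, and the result lists are merged. The facets, the four-document keyword “index” and the reciprocal rank fusion merge (with the common constant k = 60) are all assumptions chosen for the example.

```python
from collections import defaultdict

# Tiny hypothetical "index": document id -> text
DOCS = {
    "guide-running-shoes": "how to choose running shoes for beginners",
    "trail-vs-road": "trail running shoes vs road running shoes",
    "shoe-care": "how to clean and care for running shoes",
    "marathon-plan": "beginner marathon training plan",
}

def fan_out(query, facets):
    """Expand a query into itself plus one sub-query per facet (facets are hypothetical)."""
    return [query] + [f"{query} {facet}" for facet in facets]

def search(sub_query, docs=DOCS):
    """Rank documents by the number of words they share with the sub-query."""
    terms = set(sub_query.lower().split())
    scored = [(len(terms & set(text.split())), doc_id) for doc_id, text in docs.items()]
    scored.sort(key=lambda item: (-item[0], item[1]))
    return [doc_id for score, doc_id in scored if score > 0]

def reciprocal_rank_fusion(rankings, k=60):
    """Merge ranked lists: each appearance at position r adds 1 / (k + r)."""
    fused = defaultdict(float)
    for ranking in rankings:
        for rank, doc_id in enumerate(ranking, start=1):
            fused[doc_id] += 1.0 / (k + rank)
    return sorted(fused, key=lambda doc_id: (-fused[doc_id], doc_id))

sub_queries = fan_out("running shoes", ["for beginners", "trail vs road", "care"])
merged = reciprocal_rank_fusion(search(q) for q in sub_queries)
print(sub_queries)
print(merged)
```

In this toy example, the page that matches several sub-queries well ends up first in the merged list.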

Optimize for Query Fan Out

For optimization techniques, we got the famous “Good GEO is Good SEO”.

But Nicolas also mentioned two other key approaches:

- UX (user experience) – making the site clear, fluid, and engaging

- Chunking – structuring content into pieces that are easy to understand for both AI and users
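Nicolas didn’t give a recipe for chunking. Here is a minimal sketch of one common approach: split a page on its headings so each chunk carries its own title, and split long sections on paragraph boundaries. The `#` heading marker, the blank-line paragraph separator and the word limit are arbitrary assumptions.

```python
def pack_paragraphs(heading, paragraphs, max_words):
    """Group consecutive paragraphs into chunks of at most max_words (a longer paragraph stays whole)."""
    chunks, current, count = [], [], 0
    for paragraph in paragraphs:
        words = len(paragraph.split())
        if current and count + words > max_words:
            chunks.append((heading, "\n\n".join(current)))
            current, count = [], 0
        current.append(paragraph)
        count += words
    if current:
        chunks.append((heading, "\n\n".join(current)))
    return chunks

def chunk_by_headings(text, max_words=80):
    """Split text on '#' headings into (heading, body) chunks."""
    chunks, heading, paragraphs = [], "Introduction", []
    for block in text.split("\n\n"):
        block = block.strip()
        if not block:
            continue
        if block.startswith("#"):
            chunks += pack_paragraphs(heading, paragraphs, max_words)
            heading, paragraphs = block.lstrip("#").strip(), []
        else:
            paragraphs.append(block)
    chunks += pack_paragraphs(heading, paragraphs, max_words)
    return chunks

article = """# What is query fan-out?

Google splits one question into several related searches.

# How to optimize

Structure each section around a single question.

Keep answers short and explicit."""

chunks = chunk_by_headings(article, max_words=8)
for heading, body in chunks:
    print(f"[{heading}] {body}")
```

Keeping the heading with every chunk means each piece still makes sense when it is read (or quoted) on its own.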

Google Search Console

AI Overviews / AI Mode data

Everyone asked the question. Everyone got the same answer: nothing new, and no planned date for AI Overviews / AI Mode reports in Search Console for the time being.

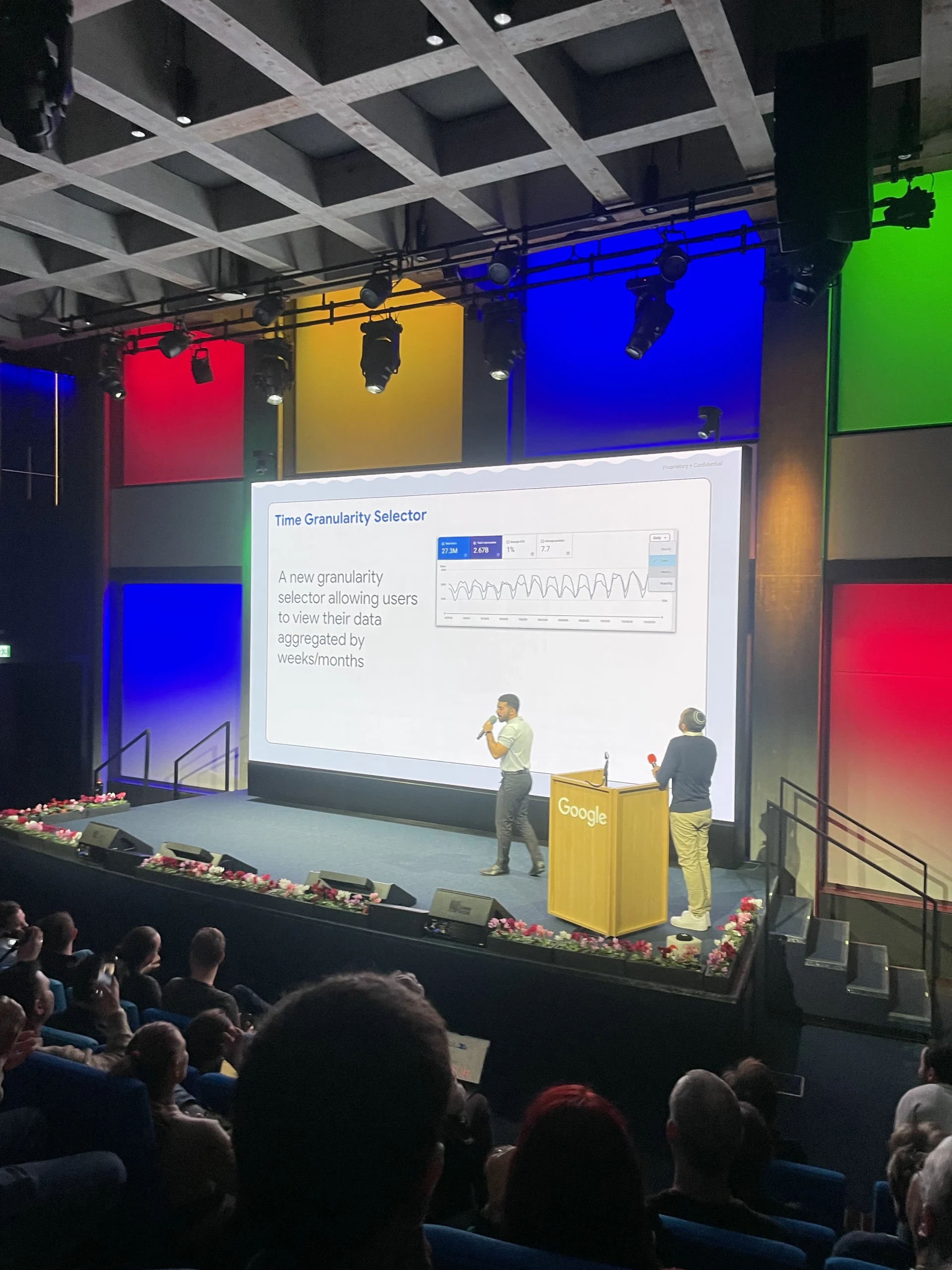

Weekly and monthly views

During the conference, they presented an exclusive new Search Console performance feature: weekly and monthly views.

Concretely, it lets you change the time aggregation of any performance chart, and thus smooth out daily fluctuations.
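The weekly and monthly views are built into the Search Console interface. If you work on exported daily data instead, the same smoothing is easy to reproduce. A minimal sketch with made-up click numbers, assuming rows of (date, clicks) and ISO weeks (Search Console’s own week boundaries may differ):

```python
from collections import defaultdict
from datetime import date, timedelta

def aggregate(rows, period):
    """Sum daily clicks per ISO week ("week") or calendar month ("month")."""
    totals = defaultdict(int)
    for day, clicks in rows:
        if period == "week":
            iso_year, iso_week, _ = day.isocalendar()
            key = f"{iso_year}-W{iso_week:02d}"
        elif period == "month":
            key = f"{day.year}-{day.month:02d}"
        else:
            raise ValueError(f"unknown period: {period!r}")
        totals[key] += clicks
    return dict(sorted(totals.items()))

# Made-up daily clicks from 1 to 14 September 2025 (the same weekly pattern twice)
start = date(2025, 9, 1)
rows = [(start + timedelta(days=i), 100 + (i % 7) * 10) for i in range(14)]
weekly = aggregate(rows, "week")
monthly = aggregate(rows, "month")
print(weekly)
print(monthly)
```

The day-to-day variation (100 to 160 clicks) disappears in the weekly totals, which is exactly the point of the new views.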

PS: New features, yes… but they really need to put an end to the delays in indexing and performance reporting!

New features in Search Console

Social network integration, query groups, structured data… you get the idea: Search Console is evolving to better meet users’ needs (or perhaps to soothe some of the collective anger?).

Apparently, new AI-based features are also coming.

Can’t wait to see them in action…

Crawl → Index → Serve: what Search Console really reveals

The Search Console team recalled a fundamental point: Google Search Console is the only tool you really need to diagnose your SEO problems. I’d say yes and no…

Crawling: discovering your URLs

Everything in the left column: Sitemaps, Crawl stats, Settings… This is the part that tells us whether Google finds us and sees what you want it to see.

- Misconfigured or ignored sitemaps

- Crawl errors

- Blocked resources (robots.txt, 403, 404…)

- URL discovery issues
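For the first items of that list (sitemaps and blocked resources), a quick sanity check is to list the sitemap URLs that your own robots.txt forbids. A minimal sketch using Python’s standard library with illustrative `example.com` data; note that `urllib.robotparser` only does simple prefix matching, so it may disagree with Googlebot on rules that use `*` or `$`.

```python
import xml.etree.ElementTree as ET
from urllib.robotparser import RobotFileParser

SITEMAP_NS = {"sm": "http://www.sitemaps.org/schemas/sitemap/0.9"}

def sitemap_urls(xml_text):
    """Return the <loc> values of a (non-index) XML sitemap."""
    root = ET.fromstring(xml_text)
    return [loc.text.strip() for loc in root.findall("sm:url/sm:loc", SITEMAP_NS)]

def blocked_sitemap_urls(xml_text, robots_txt, user_agent="Googlebot"):
    """Sitemap URLs that robots.txt disallows for `user_agent` (simple prefix rules only)."""
    robots = RobotFileParser()
    robots.parse(robots_txt.splitlines())
    return [url for url in sitemap_urls(xml_text) if not robots.can_fetch(user_agent, url)]

sitemap = """<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">
  <url><loc>https://example.com/</loc></url>
  <url><loc>https://example.com/products/shoe-1</loc></url>
  <url><loc>https://example.com/cart/checkout</loc></url>
</urlset>"""

robots_txt = """User-agent: *
Disallow: /cart/
"""

blocked = blocked_sitemap_urls(sitemap, robots_txt)
print(blocked)
```

A URL that is both submitted in the sitemap and disallowed in robots.txt sends Google contradictory signals, so any hit here is worth fixing on one side or the other.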

Indexing: the most important block

The central block: Pages, Videos, Sitemaps, Removals…

This is where we understand why a page is not in the index.

Here we find:

- Soft 404s

- “Alternate page with canonical” (but not yours 🤡)

- Server errors

- Accidentally blocked pages

- Non-indexable videos
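For the canonical case, it helps to check first what your pages actually declare. A minimal sketch with the standard library’s `html.parser`; the URLs and HTML are illustrative, and a correct declaration does not guarantee that Google will choose that canonical.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin

class CanonicalFinder(HTMLParser):
    """Collect the href values of <link rel="canonical"> tags."""

    def __init__(self):
        super().__init__()
        self.canonicals = []

    def handle_starttag(self, tag, attrs):
        if tag != "link":
            return
        attrs = dict(attrs)
        rels = (attrs.get("rel") or "").lower().split()
        if "canonical" in rels and attrs.get("href"):
            self.canonicals.append(attrs["href"])

def check_canonical(page_url, page_html):
    """Return 'missing', 'multiple', 'self', or the absolute URL the canonical points to."""
    finder = CanonicalFinder()
    finder.feed(page_html)
    if not finder.canonicals:
        return "missing"
    if len(finder.canonicals) > 1:
        return "multiple"
    target = urljoin(page_url, finder.canonicals[0])  # resolve relative hrefs
    return "self" if target == page_url else f"points to {target}"

page_html = '<html><head><link rel="canonical" href="/shoes"></head><body></body></html>'
print(check_canonical("https://example.com/shoes?color=red", page_html))
print(check_canonical("https://example.com/shoes", page_html))
```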

Serving: what Google shows users

The column on the right: Performance, Search results, Discover, Videos, Enhancements, HTTPS, CWV…

This is where we see the real impact on the user side.

- CTR, positions, impressions

- Discover (always a psychological thriller)

- Rich results

- Videos detected/served

- Core Web Vitals

- HTTPS / security

- Structured data enhancements (Breadcrumb, Product, FAQ, etc.)

Google Discover

Andy Almeida, from Google Discover, talked about the use of PBNs and expired domains in Discover, provided the domain has a good history in Search.

- Resurgence of black hat: cloaking, keyword stuffing… Not necessarily the best technique to rank.

- Synthetic AI-generated content is not yet considered good quality.

- AI-generated images are a problem; Google Discover is paying attention.

Optimizing for Google Discover: remedies and good practices

- Fight spam

- Align content with Search

- Improve the flow

- Usefulness in Search is paramount

- Maintain a regular presence in Discover

- Choose a niche and create inspiring content

- Repeat: niche, niche, niche

- UGC and video (+ social network integration in Search Console)

- Maintain quality across all the sites you control (ownership signals?)

- Possibility of Discover for e-commerce

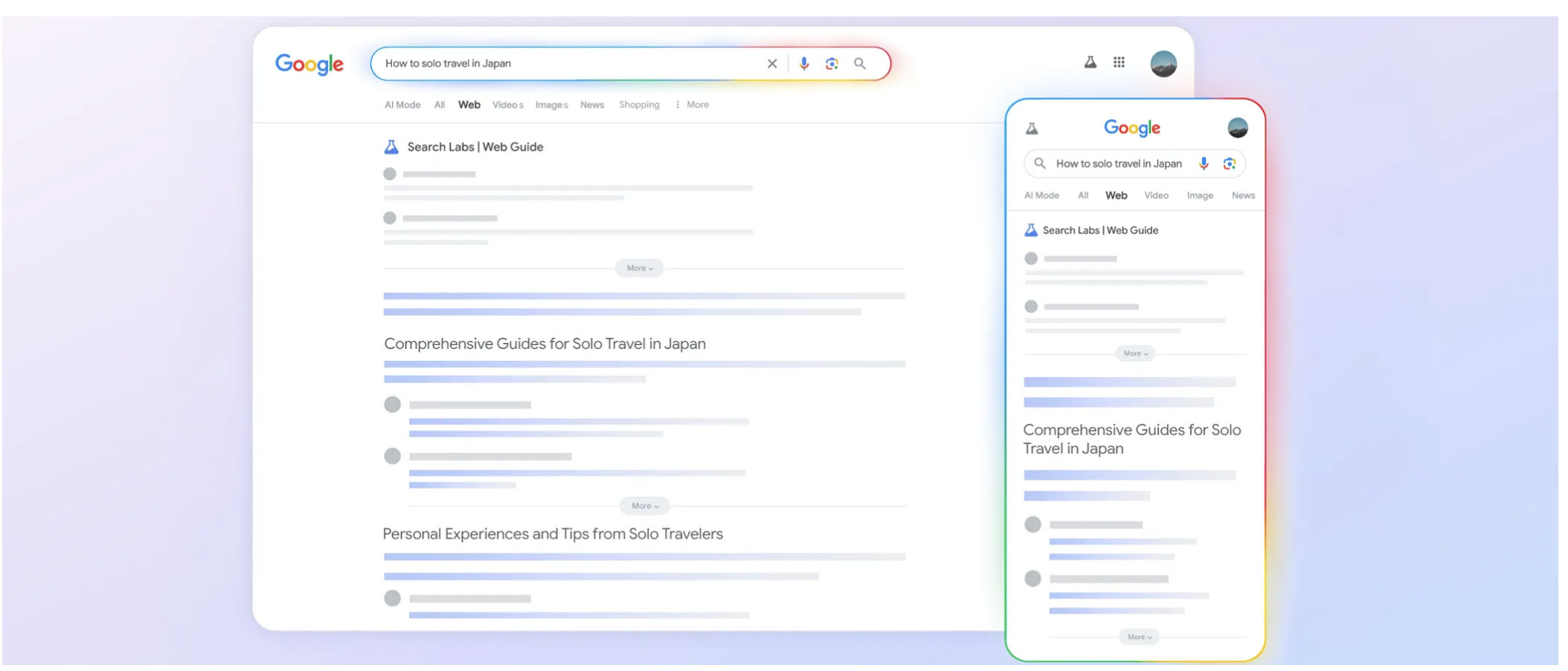

Google Web Guide: the AI alternative nobody talks about

Google Web Guide is the other (experimental) AI feature: it uses AI to reorganize search results on the SERPs, rather than just giving a standard list of links.

How does it work?

- It uses a customized version of Gemini to understand both your query and web content.

- Instead of the traditional list of links, Web Guide groups results by relevant topics.

- It may be particularly useful for open-ended or complex searches, even ones written as several sentences.

- Under the hood, it also uses query fan-out, running several related searches to generate more relevant results (similar to AI Mode).

Site Kit, Site Kit, Site Kit

John Mueller talked about Site Kit, Nicolas (the director of Google Zurich) talked about Site Kit, and Maria (the person behind Site Kit in Zurich) talked about Site Kit.

The way they did it gave me the impression that it was no accident in their comms.

Maybe they are trying to get a message across… but surely I’m wrong.


PS: For those who don’t know, Site Kit is Google’s plugin that lets you set up Analytics, Search Console, etc. directly from WordPress, without touching anything technical.

When you think about it, it is really practical. Above all, it displays dashboards that clients can understand, directly on their site, without drowning them in a thousand dashboards.

Personally, I rarely recommended it. I have always been cautious about its potential impact on site performance.

But now… I installed it on my own site, and from now on I will recommend it. You never know.

For info, Google also offers plugins for other CMSs, but they rely heavily on Site Kit since WordPress remains the dominant CMS.

E-commerce and structured data

Implementing structured data

Implementing structured data dynamically (via JavaScript) is still workable for organic search. Google processes the data even when it is generated via JavaScript.

But be careful: if the raw data (in the HTML source) differs significantly from the rendered data (what appears after the JS runs), it may cause problems. Consistency is key.

In addition, don’t forget that JS rendering remains costly for Google:

“JS rendering remains expensive (and slow) for Google. But don’t forget that we rely heavily on caching.”

Martin Splitt
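To check the consistency point, you can extract the JSON-LD from the raw HTML and from the rendered HTML and compare them field by field. A minimal sketch, assuming you already have both versions as strings (getting the rendered DOM, for example with a headless browser, is out of scope here); the product data is made up.

```python
import json
from html.parser import HTMLParser

class JsonLdExtractor(HTMLParser):
    """Collect and parse the contents of <script type="application/ld+json"> tags."""

    def __init__(self):
        super().__init__()
        self.blocks = []
        self._buffer = []
        self._inside = False

    def handle_starttag(self, tag, attrs):
        if tag == "script" and (dict(attrs).get("type") or "").lower() == "application/ld+json":
            self._inside, self._buffer = True, []

    def handle_data(self, data):
        if self._inside:
            self._buffer.append(data)

    def handle_endtag(self, tag):
        if tag == "script" and self._inside:
            self.blocks.append(json.loads("".join(self._buffer)))
            self._inside = False

def extract_jsonld(html_text):
    parser = JsonLdExtractor()
    parser.feed(html_text)
    parser.close()
    return parser.blocks

def diff_fields(raw, rendered, prefix=""):
    """Dotted paths whose values differ between two JSON-LD objects (nested dicts are walked)."""
    diffs = []
    for key in sorted(set(raw) | set(rendered)):
        before, after = raw.get(key), rendered.get(key)
        path = prefix + key
        if isinstance(before, dict) and isinstance(after, dict):
            diffs += diff_fields(before, after, path + ".")
        elif before != after:
            diffs.append(f"{path}: {before!r} -> {after!r}")
    return diffs

raw_html = '<html><head><script type="application/ld+json">{"@type": "Product", "name": "Trail Shoe", "offers": {"price": "89.00"}}</script></head></html>'
rendered_html = '<html><head><script type="application/ld+json">{"@type": "Product", "name": "Trail Shoe", "offers": {"price": "79.00"}}</script></head></html>'

raw = extract_jsonld(raw_html)[0]
rendered = extract_jsonld(rendered_html)[0]
for line in diff_fields(raw, rendered):
    print(line)
```

Here the price changes after rendering, which is precisely the kind of inconsistency Martin Splitt warned about.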

Dynamic implementation of structured data in e-commerce

For e-commerce / Shopping, Martin Splitt, otherwise a fervent defender of JS (developer training and spokesperson for JS SEO at Google), is categorical: it is not recommended.

- Google runs many crawls to retrieve time-sensitive information (price, availability, etc.) from e-commerce sites.

- JS rendering is costly and slow, which can slow down crawling and delay data updates.

- Only the main element is rendered, so some information may not be taken into account.

- If structured data is not available in the raw HTML, it is likely to be ignored by Google.

In summary:

- For classic SEO, adding structured data dynamically via JS can work, but it is still resource-intensive and can create inconsistencies.

- Caching the rendered data may be an intermediate solution to improve performance and consistency.

- In e-commerce, where information must always be up to date, put structured data directly in the HTML, without relying only on JS.
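In practice, “structured data directly in the HTML” means generating the JSON-LD on the server, from the same catalog data as the page, so that price and availability are present before any JS runs. A minimal sketch, assuming a hypothetical `product` dict from your own catalog; the `Product` / `Offer` properties are standard Schema.org, but check Google’s product structured data documentation for the required and recommended fields.

```python
import json

IN_STOCK = "https://schema.org/InStock"
OUT_OF_STOCK = "https://schema.org/OutOfStock"

def product_jsonld(product):
    """Render a Schema.org Product/Offer JSON-LD <script> tag from catalog data."""
    data = {
        "@context": "https://schema.org",
        "@type": "Product",
        "name": product["name"],
        "sku": product["sku"],
        "offers": {
            "@type": "Offer",
            "price": f"{product['price']:.2f}",
            "priceCurrency": product["currency"],
            "availability": IN_STOCK if product["stock"] > 0 else OUT_OF_STOCK,
            "url": product["url"],
        },
    }
    # Escape "<" so a value such as "</script>" cannot close the tag early.
    payload = json.dumps(data, ensure_ascii=False).replace("<", "\\u003c")
    return f'<script type="application/ld+json">{payload}</script>'

# Hypothetical catalog entry, read at request time so the HTML always carries fresh values
product = {
    "name": "Trail Shoe",
    "sku": "TS-42",
    "price": 89,
    "currency": "EUR",
    "stock": 0,
    "url": "https://example.com/products/trail-shoe",
}
print(product_jsonld(product))
```

The tag is then printed into the page template like any other server-side value, so the raw HTML and the rendered HTML carry the same data.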

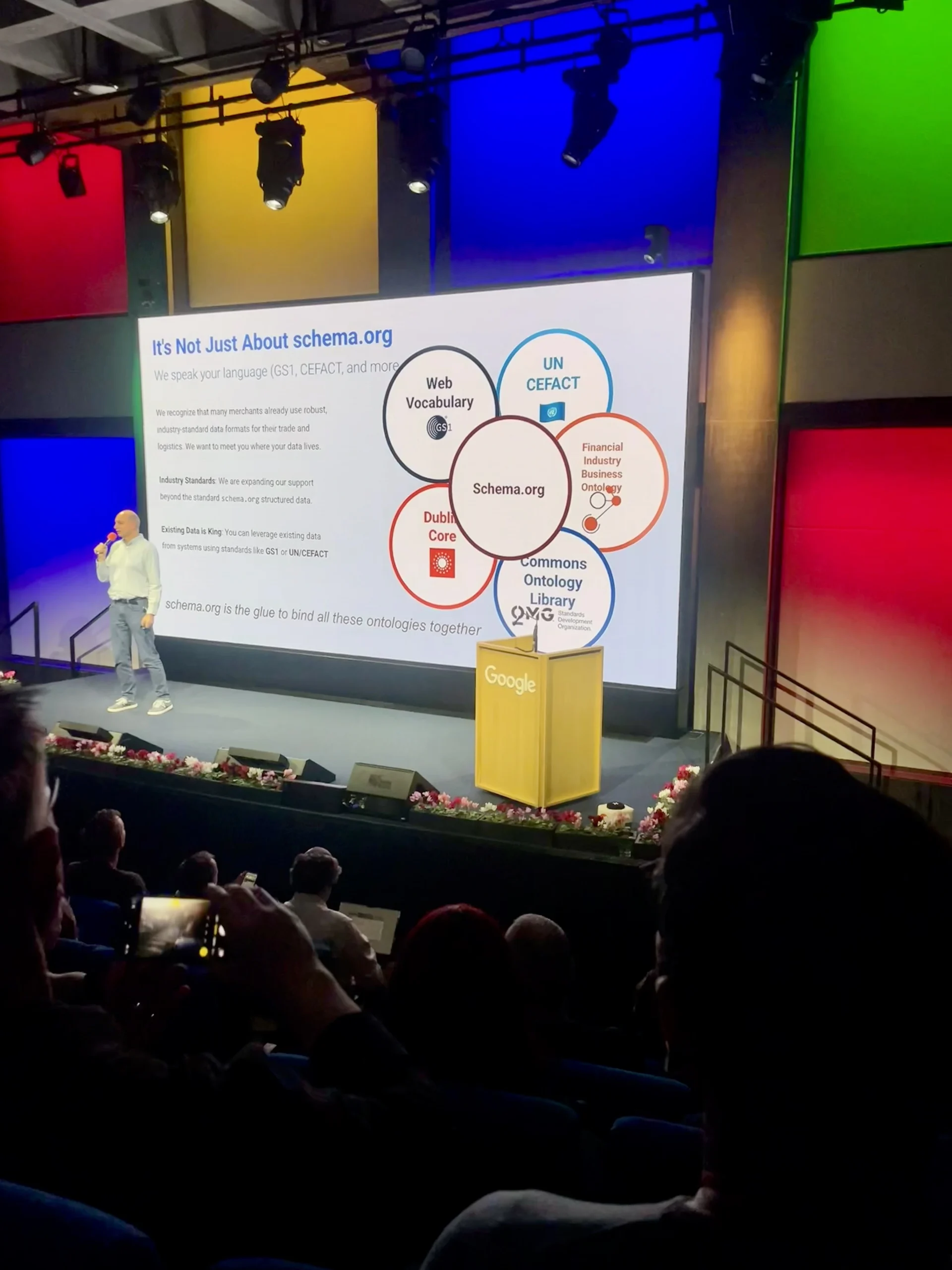

Structured data standards: Pascal Fleury

We often talk about Schema.org, which makes sense since it has more or less become the de facto standard for structured data.

That said, Pascal Fleury highlighted other available standards, which can be useful in many cases.

The idea is not to replace Schema.org but to complement it, to enrich and clarify the data.

Example:

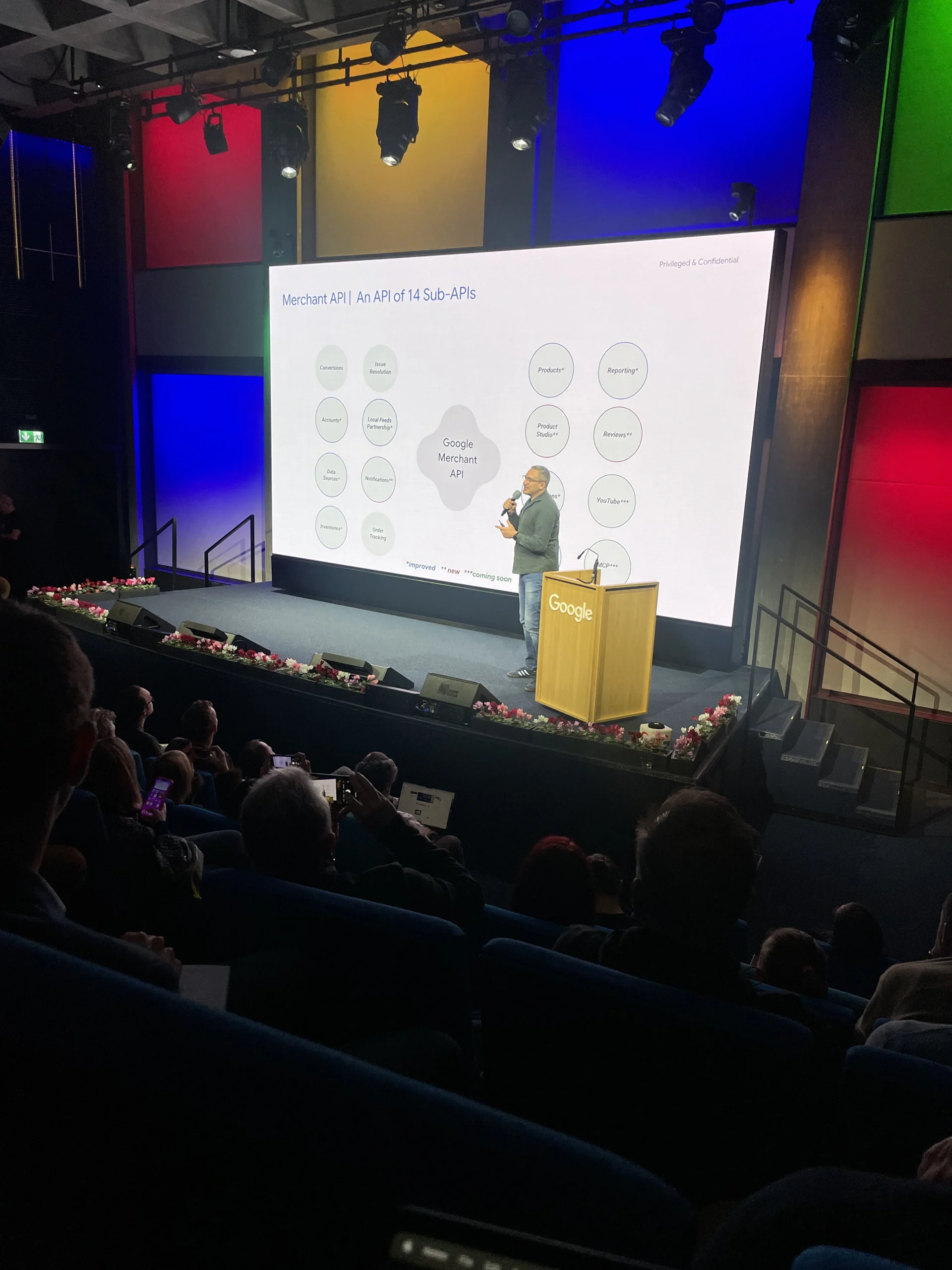

Google Merchant Center API

During the conference, we were introduced to the new Merchant API, the successor to the Content API for Shopping.

It now consists of several sub-APIs, letting you better manage and organize product and data feeds (e-commerce).

Specifically, the API allows you to:

- Manage how your business and products appear on Google.

- Programmatically access data, insights and unique features.

- Grow your business and reach more customers on Google.

Usefulness above all

Old-school SEO (keyword stuffing, artificial optimization) is dead.

- Google now requires content to be useful.

- For sensitive topics (YMYL – Your Money Your Life), authority and reliability are essential.

- Google uses whitelists of reliable sites for these topics.

- If you’re not on them, your content is virtually invisible.

As an aside, when I saw “cloakroom”, I immediately thought of “cloaking”, the black hat technique.

After the Sandbox at Google Paris… seriously, what is it with these mysterious names at Google? Always intriguing, always a little geeky.

Couldn’t they pick a simpler term?

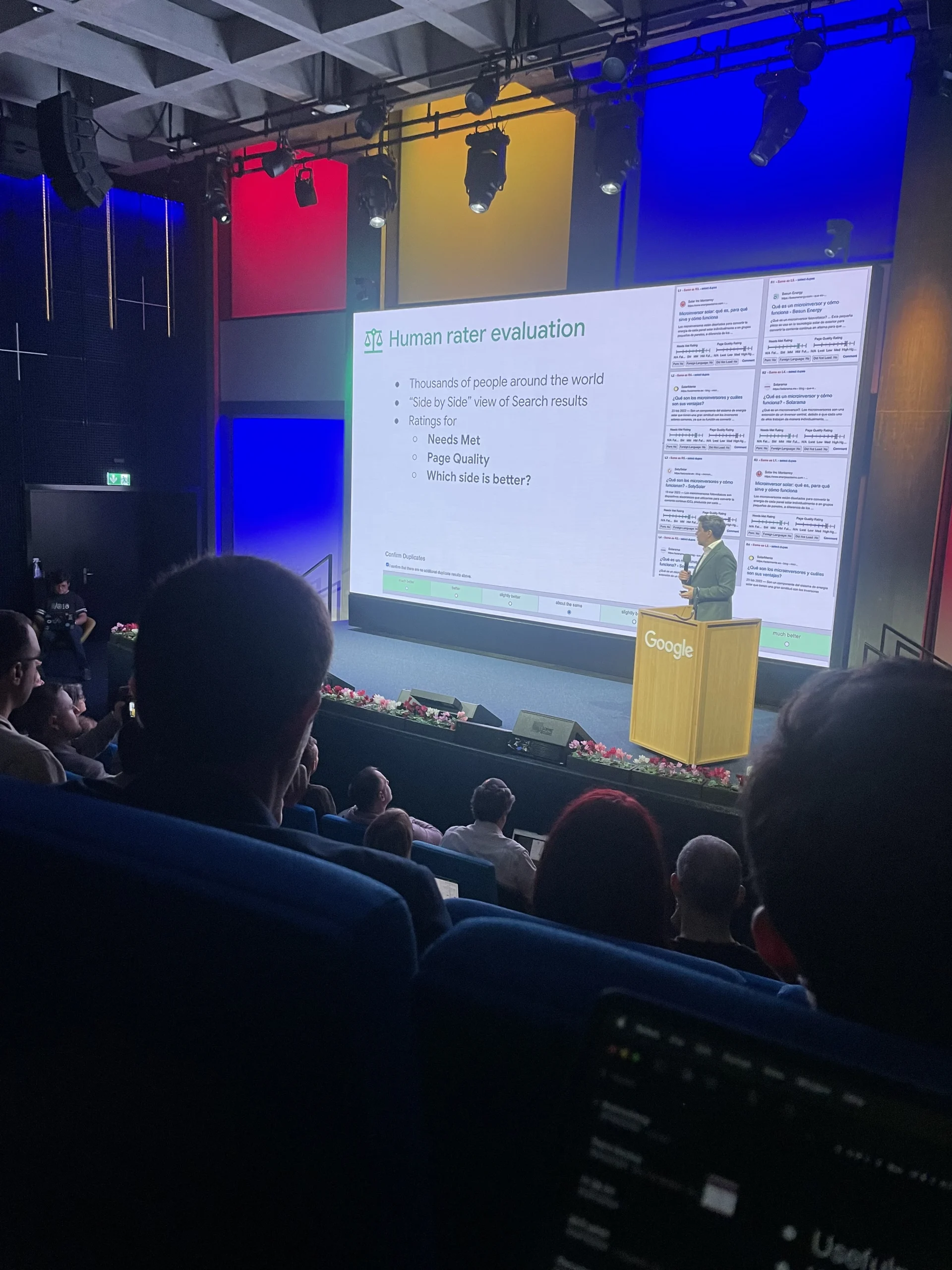

How does Google measure usefulness?

John says it’s “awesomeness”… which, concretely, doesn’t mean much 😅

Nicolas is more pragmatic. He highlights:

(a) Humans (raters): Quality Rater Guidelines

- They compare results side by side and assess whether the content meets actual needs (Needs Met).

- “Read, read, read,” they say: https://services.google.com/fh/files/misc/hsw-sqrg.pdf

(b) Algorithms

- Models run in a loop to score quality at scale.

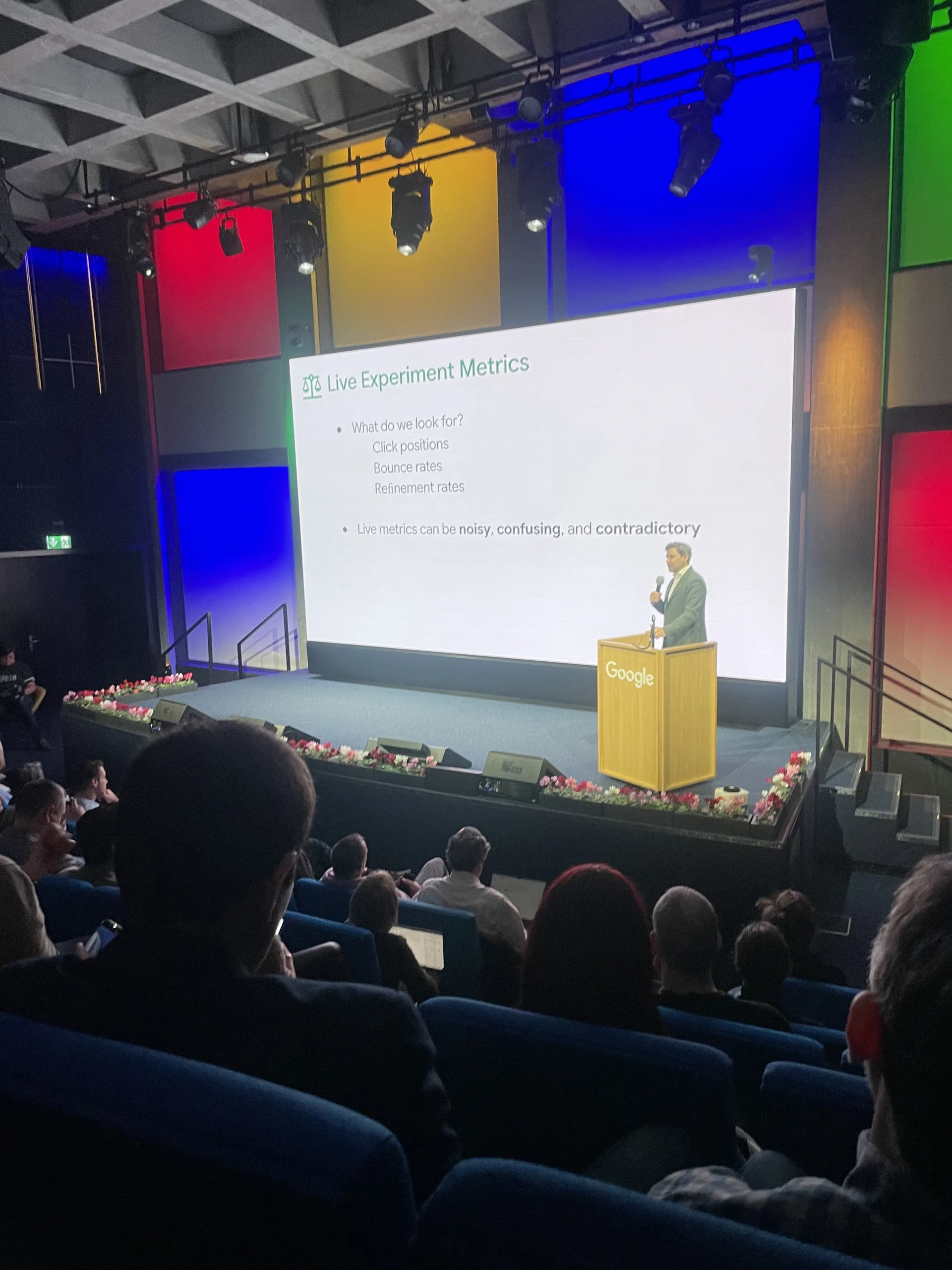

(c) Live tests / A/B tests

- Analysis of user behavior: clicks, time on page, pogo-sticking (an immediate return to the results = bad sign). Ring a bell? Navboost! 😎

(d) SafeSearch

- Not all clicks are created equal.

- Clicks on sensitive, restricted or prohibited content are considered negative.
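Google did not say how these behavioral signals are computed, and Navboost’s internals are not public. Purely to illustrate what pogo-sticking means, here is a toy metric on hypothetical click logs: the share of clicks on a URL followed by a return to the results within a few seconds. The `Click` fields and the 10-second threshold are assumptions.

```python
from dataclasses import dataclass

@dataclass
class Click:
    """One result click from a hypothetical log."""
    url: str
    dwell_seconds: float    # time spent on the page after the click
    returned_to_serp: bool  # whether the user then went back to the results

def pogo_stick_rate(clicks, threshold=10.0):
    """Share of clicks per URL followed by a return to the results within `threshold` seconds."""
    totals, quick_returns = {}, {}
    for click in clicks:
        totals[click.url] = totals.get(click.url, 0) + 1
        if click.returned_to_serp and click.dwell_seconds < threshold:
            quick_returns[click.url] = quick_returns.get(click.url, 0) + 1
    return {url: quick_returns.get(url, 0) / n for url, n in totals.items()}

clicks = [
    Click("https://a.example/guide", 95.0, True),
    Click("https://a.example/guide", 240.0, False),
    Click("https://b.example/list", 4.0, True),
    Click("https://b.example/list", 6.5, True),
    Click("https://b.example/list", 120.0, True),
]
rates = pogo_stick_rate(clicks)
print(rates)
```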

Tech vs. human: the balance

Google combines:

- Technical tests

- Quality guidelines

- Real user satisfaction

If a technical change improves the metrics but frustrates users, it is dropped.

Objective: for the algorithm to eventually think like a demanding human.

The open web is shrinking

- Walled gardens are multiplying (paywalled content, closed apps, private communities).

- Google has to fight to crawl open content.

- SEO implication: open, accessible, quality content = rare and precious.

AI is not a separate engine

- Google’s AI does not erase the fundamentals.

- Same index, same quality criteria.

- A site considered “trash” by the classic algorithm will not be surfaced by the AI.

- The AI draws from what is already identified as reliable and authoritative.

GEO & n-grams: speaking the language of AI

- Optimize content so that it is citable and understandable by AI.

- Analyze n-grams (sequences of words) to align content with the structure AI prefers.

- Objective: clear, structured answers, not keyword stuffing.
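The talk didn’t name a tool for the n-gram analysis. A minimal sketch of the counting itself, which you could run on your own page and on the answers you see cited; tokenization is deliberately naive (lowercase, letters, digits and apostrophes only, sentence boundaries ignored), and the sample text is made up.

```python
import re
from collections import Counter

def ngrams(text, n):
    """Return the word n-grams of a text (lowercased, letters/digits/apostrophes only)."""
    words = re.findall(r"[a-z0-9']+", text.lower())
    return [" ".join(words[i:i + n]) for i in range(len(words) - n + 1)]

def top_ngrams(text, n=2, k=5):
    """The k most frequent n-grams with their counts (ties keep first-seen order)."""
    return Counter(ngrams(text, n)).most_common(k)

text = ("Google Search Console shows clicks. Google Search Console shows impressions. "
        "Search Console data matters.")
print(top_ngrams(text, n=2, k=3))
```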

Why Site Kit (again)

- Direct feedback in WordPress via Search Console and Analytics.

- Lets you close the loop:

  - Publish content

  - Check real engagement (clicks, time on page, interactions)

  - Adjust based on actual performance

- Essential to align with Google’s notion of usefulness.

Google Search Central Zurich: the details

Search Console query groups

- Query groups are computed by AI.

- No correlation with rankings on the SERPs. They are based on our own data.

- Google insider tip: you can use regular expressions (regex) to temporarily adjust query groups: add/remove keywords, etc.
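Query groups themselves are computed by Google, so this is not the Search Console feature. It is a minimal sketch of the same idea applied to your own exported queries: ordered regex rules assign each query to a group (first match wins), and clicks are summed per group. The group names, patterns and data are hypothetical.

```python
import re

# Hypothetical, ordered rules: the first pattern that matches decides the group.
GROUP_RULES = [
    ("brand", re.compile(r"\bacme\b", re.IGNORECASE)),
    ("pricing", re.compile(r"\b(price|pricing|cost|cheap)\b", re.IGNORECASE)),
    ("how-to", re.compile(r"^(how|what|why)\b", re.IGNORECASE)),
]

def group_queries(rows):
    """Sum clicks per group for (query, clicks) rows; unmatched queries go to "other"."""
    totals = {}
    for query, clicks in rows:
        group = next((name for name, pattern in GROUP_RULES if pattern.search(query)), "other")
        totals[group] = totals.get(group, 0) + clicks
    return totals

rows = [
    ("acme running shoes", 120),
    ("running shoes price", 40),
    ("how to lace running shoes", 25),
    ("trail shoes", 10),
    ("acme price", 8),
]
print(group_queries(rows))
```

Because the rules are ordered, “acme price” lands in the brand group; reordering the list is how you decide which intent wins on overlapping queries.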

Search Console annotations

- 200 annotations per property

- Don’t put anything confidential in these annotations. Why? I don’t know.

Search Console AI configurator

The new Search Console tool lets you describe in natural language what you want to see in Search Console.

- Accepts text

- Accepts regular expressions

- Performance report only: not Discover or News

- Accuracy to double-check: don’t blindly trust the results

Search Console reports canonical URLs

The long tail may be over-represented in Search Console

- Your best query may not be as big as it looks in reality.

- Tip: compare with Google Trends to put it into perspective.

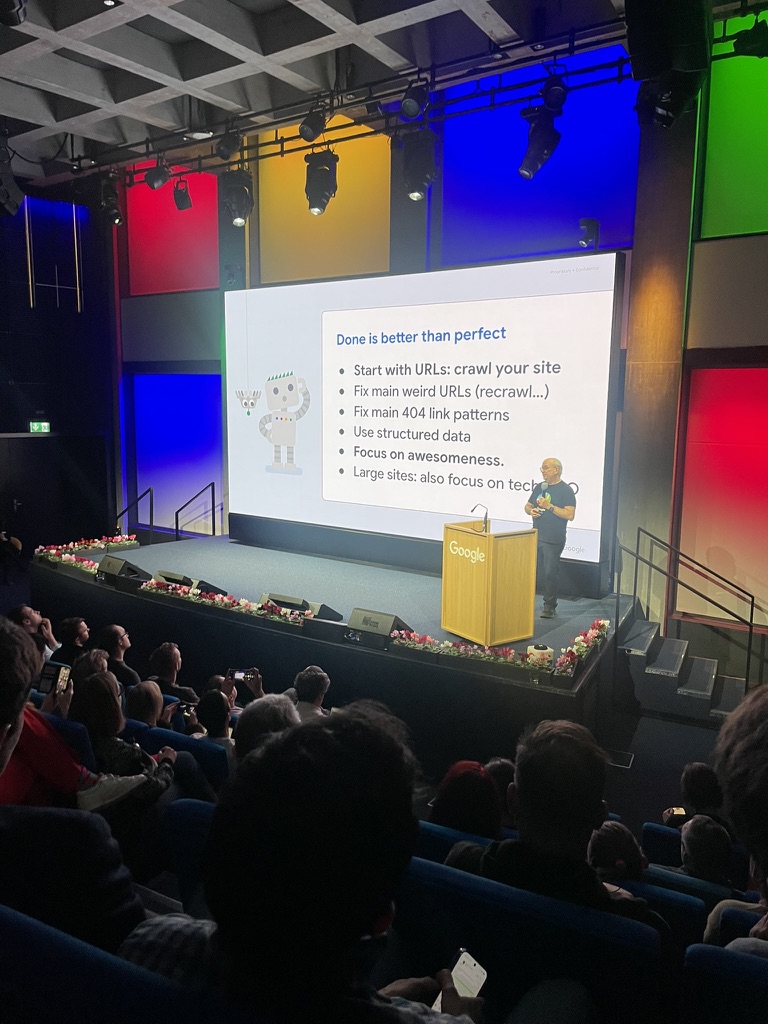

Google’s SEO checklist

If you have always dreamed of an official SEO checklist with priorities according to Google…

Good news: your dream has come true.

More seriously: done is better than perfect.

- Start with the URLs: crawl your site.

- Fix the main unusual URLs (recrawl…).

- Fix the main 404 link patterns.

- Use structured data.

- Focus on excellence (quality).

- Large sites: focus on technical SEO.
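For the “main 404 link patterns” item, here is a minimal sketch, assuming you already have a list of URLs returning 404 (for example exported from the Search Console Pages report or from your logs): collapsing them into coarse patterns shows which templates or old sections generate most of the errors. Keeping two path segments and replacing digits with `{n}` are arbitrary choices, and the URLs are made up.

```python
import re
from collections import Counter
from urllib.parse import urlsplit

def url_pattern(url, depth=2):
    """Reduce a URL to its first `depth` path segments, with digit runs replaced by {n}."""
    segments = [segment for segment in urlsplit(url).path.split("/") if segment]
    return re.sub(r"\d+", "{n}", "/" + "/".join(segments[:depth]))

def top_404_patterns(urls, k=3):
    """The k most common patterns among the given 404 URLs."""
    return Counter(url_pattern(url) for url in urls).most_common(k)

not_found = [
    "https://example.com/blog/2019/old-post",
    "https://example.com/blog/2019/another-post",
    "https://example.com/blog/2020/removed",
    "https://example.com/products/123",
    "https://example.com/products/456?ref=mail",
    "https://example.com/tag/seo",
]
patterns = top_404_patterns(not_found)
print(patterns)
```

Fixing (or redirecting) one pattern at the top of the list usually clears many URLs at once, which is the spirit of “fix the main patterns” rather than chasing URLs one by one.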
